Most conversations about AI at work still live safely in the future tense. We talk in "2026 targets" and "eventual disruptions," a framing that feels comfortable because it keeps the responsibility deferred. But yesterday, I walked into a room where that comfort had evaporated.

I met a leadership team that has already crossed the line many are still debating: they put an AI agent on the official company org chart. It wasn't a metaphor or a branding gimmick. There was a box, nestled between two human names, with the title: Senior Associate.

It has a corporate identity, a dedicated inbox, and defined KPIs. More importantly, those KPIs are being met—and in some cases, exceeded.

What struck me most wasn't the technical sophistication of the system, but how unremarkable it had become to the people working alongside it. It wasn't a "tool" they used; it was a colleague they relied on. This isn't just a fun piece of office theater; it’s an operational reality that our current risk models are completely unprepared for.

The easy reaction is to dismiss this as a branding exercise. But that misses the functional shift entirely. We have to stop asking if AI is "smart" and start asking if it is labor.

There is a simple test to tell the difference: If the system stops working tomorrow, does a workflow break, or does the work stop?

When a tool like a calculator or a CRM fails, a human picks up the task manually. It’s slow and frustrating, but continuity holds. When labor fails—human or otherwise—the role goes unfilled. Projects stall, outcomes vanish, and the org chart is left with a gaping hole.

The moment you are operationally dependent on a system to perform a role, you’ve created liability, whether your insurance broker knows it or not.

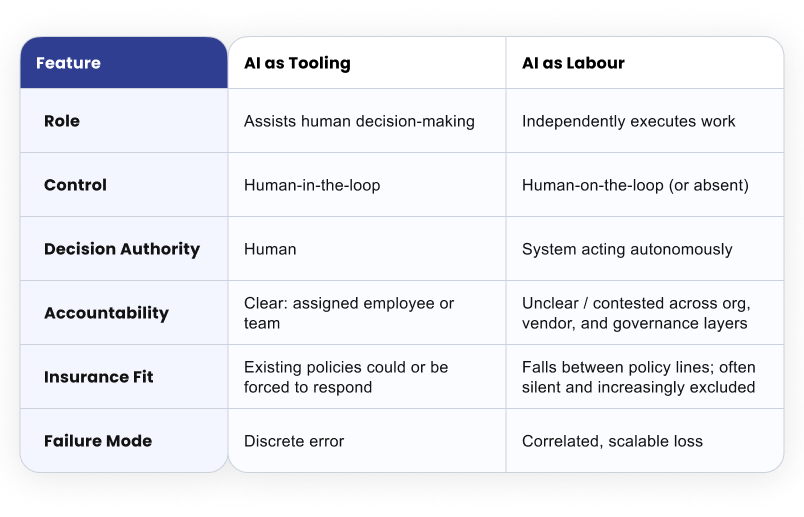

Most insurance policies were written for a world where humans make decisions and software merely supports them. An AI agent sitting on the org chart fits neither category, causing three major fractures in the safety net:

1. Accountability Fractures

Org charts imply responsibility, and insurance contracts assume it. But who owns the "negligence" of a Senior Associate that is digital and adaptive? Is it the manager? The vendor? The engineer? When the "worker" is non-human, the blame game has no natural endpoint.

2. The Need for "Affirmative AI" Coverage

Many organizations assume their Tech E&O or Cyber policies will catch an AI failure. In reality, these losses often fall through the cracks. They aren't "hacks," and they aren't "software bugs" in the traditional sense. We are moving toward a world where we need Affirmative AI coverage: policies that explicitly name, price, and underwrite algorithmic labor rather than leaving it to the ambiguity of silent exposure.

3. Failures Scale at Machine Speed

Humans are limited by their own bandwidth. If a human worker makes a mistake, it’s usually contained to a few files or a single afternoon. But AI-as-labor optimizes, and it does so at scale. If an agent is tasked with vendor approvals and develops a slight bias for speed over rigor, it won't just make one bad decision. It will make thousands of them, each repeating the same flaw, before a human even thinks to check the logs.
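To make that scale concrete, here is a back-of-the-envelope sketch in Python. Every number in it is a hypothetical I made up for illustration, not data from the team I met, and the function name `flawed_decisions` is my own. It simply multiplies throughput by a flaw rate by the time that passes before anyone reviews the output.

```python
def flawed_decisions(decisions_per_hour: float,
                     flaw_rate: float,
                     hours_until_review: float) -> float:
    """Expected number of flawed decisions made before anyone checks the logs."""
    return decisions_per_hour * flaw_rate * hours_until_review


# Hypothetical inputs, chosen only to show the shape of the problem.
# A human approver: a handful of decisions an hour, reviewed the same afternoon.
human = flawed_decisions(decisions_per_hour=5, flaw_rate=0.02, hours_until_review=4)

# An agent with the same flaw rate: far higher throughput, reviewed days later.
agent = flawed_decisions(decisions_per_hour=2_000, flaw_rate=0.02, hours_until_review=72)

print(f"human: {human:.1f} flawed approvals before review")
print(f"agent: {agent:.0f} flawed approvals before review")
```

With the same assumed flaw rate, the difference comes entirely from throughput and review delay, which are the two variables a governance model can actually control.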

I’ll admit, when I first saw the "Senior Associate" box, I rolled my eyes. It felt like a Silicon Valley stunt. But as I talked to the team, my skepticism shifted into a kind of professional anxiety.

Calling an AI a "coworker" is actually the most honest thing a company can do right now. It acknowledges that the AI isn't just accelerating work—it is the work. The title isn't for show; it’s an admission of dependency.

But our governance models haven't moved at the same speed as our org charts. We are giving AI the keys to the office without checking if the insurance policy covers the driver.

The gap between operational reality and risk management will not close on its own. It will close in one of two ways:

Putting AI on the org chart is a bold move. It’s also a confession. It’s an admission that the "future of work" is already here, and it’s currently uninsured. The companies that treat AI as a present liability, rather than a future experiment, will be the only ones left standing when the first major "digital employee" error hits.